On 15th July, Helen Rosner wrote a piece for The New Yorker about “Roadrunner”, the new documentary about legendary food writer Anthony Bourdain. In it, the director Morgan Neville revealed that 45 seconds of audio, featuring Bourdain’s voice narrating a personal email written to his close friend David Choe, was created by an AI model. The revelation, delivered as an offhand answer to Rosner’s question about how he came to have a recording of one of Bourdain’s personal emails, has since been at the heart of a debate about the ethics of using AI to recreate voices. Broadly, voices feeding into the debate fall into four distinct camps:

1. Those who don’t see it as problematic

The main thrust of the argument here is that 100,000 hours of footage of Bourdain was available, much of it in the public domain. To recreate the 45 seconds of audio using AI, ten hours of the food writer’s voice were fed into the model. This camp also focuses on the fact that the AI narrates actual words written by Bourdain in an email exchange with his close friend David Choe. Lastly, it holds that the use of AI voices is an inevitable evolution of storytelling techniques. More on that below.

2. Those who see the use of AI as an evolution of storytelling techniques

In his statement following the eruption of opinions on social media about his use of AI, director Morgan Neville said: “it was a modern storytelling technique that I used in a few places where I thought it was important to make Tony’s words come alive.” This position is supported by people who cite the many examples in documentary and entertainment history of actors being used to recreate the voices of non-fictional subjects, for example in the 1988 documentary “The Thin Blue Line” by Errol Morris. Critics of this argument say that, unlike in “Roadrunner,” the context of those earlier films and documentaries made it clear when the voices were not those of the actual subject.

3. Those who fear the impact of a lack of regulation around the use of Deepfake voices

The counterpart to the ‘evolution of storytelling techniques’ argument is that ostensibly innocuous, fleeting uses of AI voices, like the one in “Roadrunner”, in fact signal the beginning of a slippery slope in which the technology can be used to spread misinformation or to prop up a specific viewpoint.

This camp is concerned with issues of consent and disclosure, as well as the intersection of a creator’s intent (whether a director’s or otherwise) and the use of new technology.

And on the topic of consent…

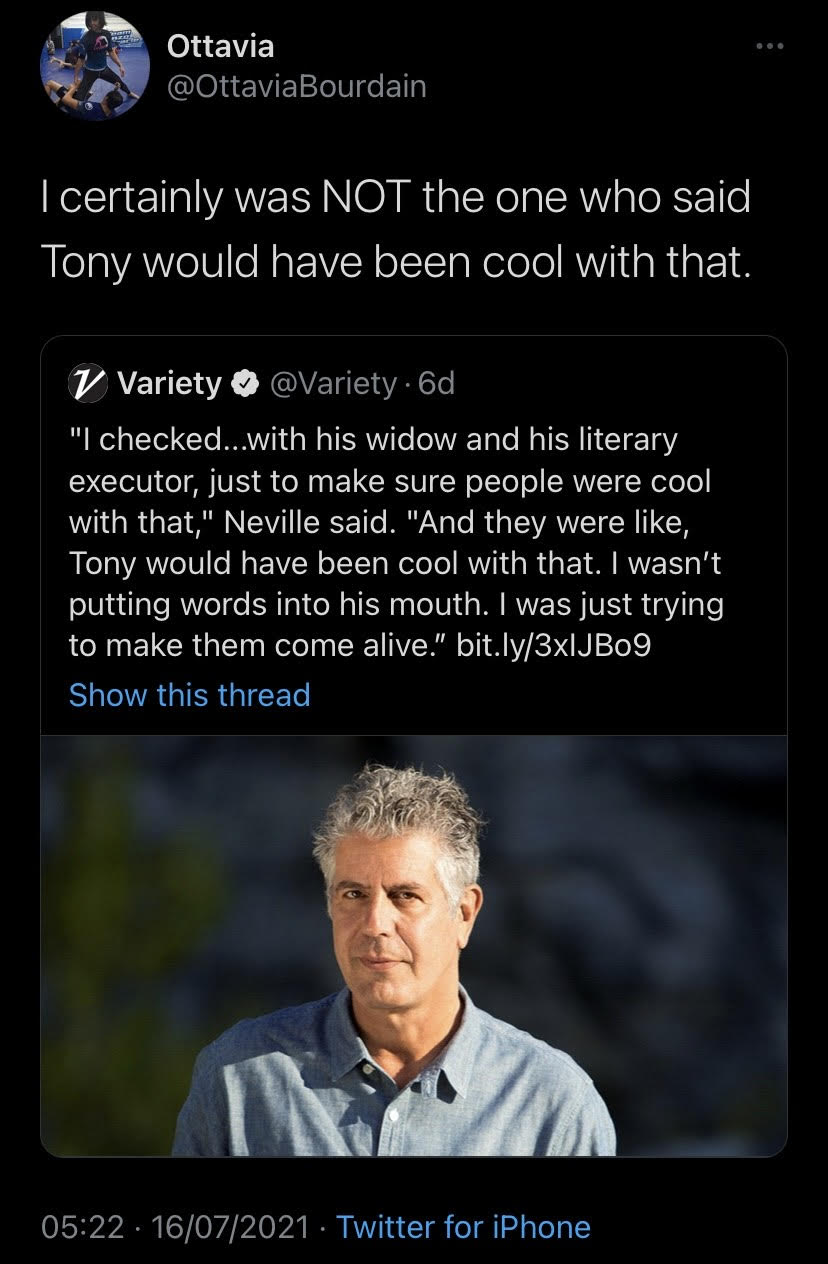

Morgan Neville’s claim that he “checked, you know, with his widow and his literary executor, just to make sure people were cool with that. And they were like, Tony would have been cool with that,” was later disputed by Bourdain’s ex-wife, Ottavia Busia.

4. Those who view the lack of disclosure as unethical

In his interview with Helen Rosner, Neville is quoted as saying: “If you watch the film, other than that line you mentioned, you probably don’t know what the other lines are that were spoken by the A.I., and you’re not going to know. We can have a documentary-ethics panel about it later.”

Two things about this have drawn complaints from this camp. First, by failing to disclose the use of AI, the film conveniently bypassed the ethics of doing so, the implication being that creative license is a reason to defer ethical questions. Second, there are the potential ramifications, captured by The New York Times in a quote from Academy Award-winning documentary filmmaker Mark Jonathan Harris: “If viewers begin doubting the veracity of what they’ve heard, then they’ll question everything about the film they’re viewing.” The fear here is that a lack of transparency around the role of technology in fabrication can perpetuate the post-truth world.


It’s an interesting and necessary debate, with transparency at the heart of all viewpoints. Disclosure, or in this case the lack of it, shapes the audience’s perception of the intention behind the use of technology. The furore around “Roadrunner” shows how important disclosure is to any regulation of how the technology is used creatively.

AI or no AI, any kind of storytelling, even documentary, is coloured by the vision and judgement of the director and that inevitably comes with a level of distortion. If deeper ethical consideration can limit the use of technology as a tool for inadvertently confirming unavoidable bias, then it should.