At Papercup, our mission has always been clear: make the world’s videos watchable in any language. We’re not just aiming for translation; we’re revolutionizing how voices are dubbed, creating synthetic voices so realistic that they’re indistinguishable from human speech.

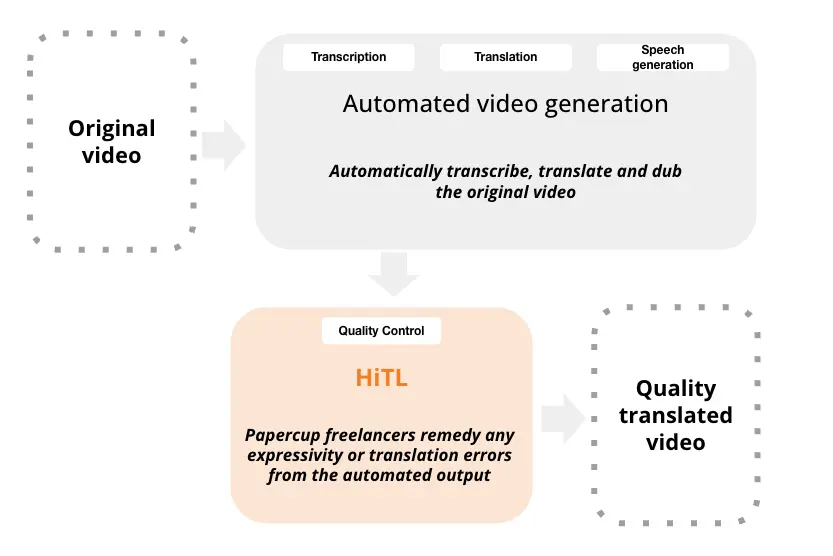

To deliver premium video content, we’ve implemented a human-in-the-loop system. This approach blends the scalability of AI with the precision of professional translators, who use their deep domain knowledge to ensure that every nuance and subtlety of the original content is preserved. For instance, translating the specialized vocabulary of poker requires domain expertise to capture the true essence of the game in another language.

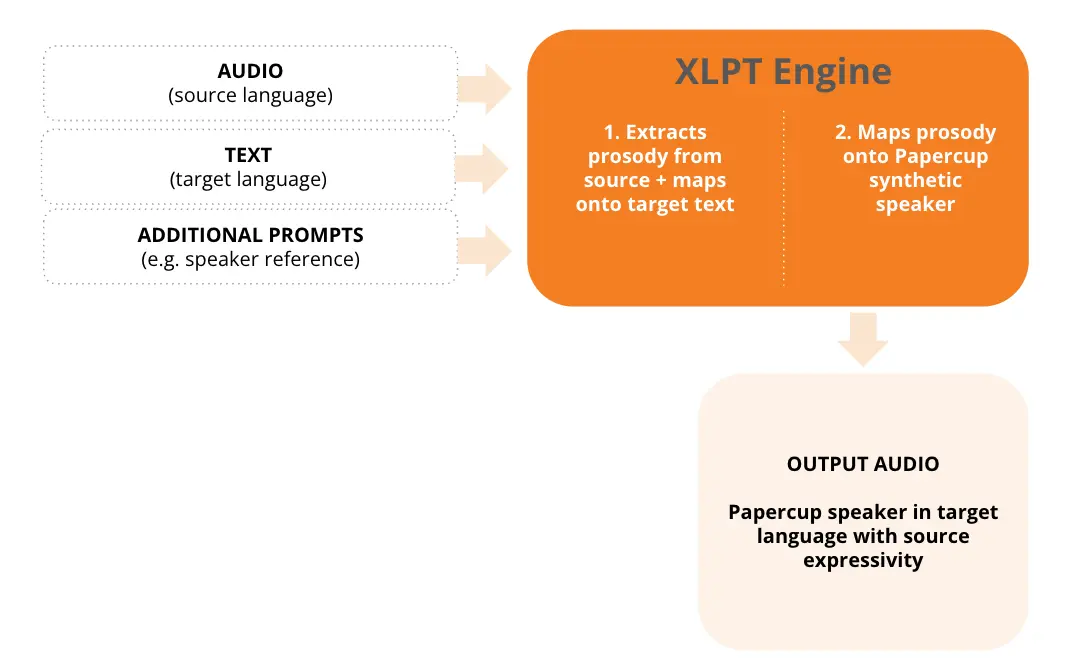

But to truly scale our mission, we needed a technological breakthrough. Today, we’re excited to introduce the first system on the market that can automatically translate, map, and control emotion from one language to another. Let’s dive into how it works.

An overview of the basic AI dubbing model

Our system processes a source video in three stages: it first generates a script from the speech of the speakers on screen, then translates that script, and finally synthesizes speech in the target language. Professional translators (our human-in-the-loop, or HiTL, reviewers) then fine-tune this output. Our latest breakthrough goes a step further by enabling the seamless transfer of emotion, something never before achieved in a scalable system.

Automated solutions have historically struggled with accurately mapping prosody and emotion from one language to another. Manual adjustments or voice actors are often needed to drive the emotion in a synthetic voice, making these solutions either unscalable or unsuitable for premium content.

Introducing XLPT (cross-lingual prosody transfer)

You might be wondering: what exactly is prosody? Prosody refers to the patterns of stress and intonation in speech. Essentially, it is the rhythm and melody that convey the true context and emotion behind words.

To learn more about prosody, read "Teaching computers to speak: the prosody problem".

TL;DR: the way you say something changes its meaning. For example, “That’s interesting” can be said sarcastically or sincerely. Here are some of the ways you can vary prosody:

- Emphasizing certain words

- How quickly/slowly you speak

- Putting pauses for dramatic effect

- Yelling vs. speaking at a normal volume vs. whispering

- Laughing while talking

Our new XLPT system changes the game

- Automatically mapping expressivity from the source audio without the need for human intervention

- Training custom models on customer data for enhanced expressivity

- Providing enhanced control over emotion, intonation, and tone where needed

The result is the first AI voice system in production that can automatically translate and map emotion from one language to another, capturing the true essence of the content and all the nuances of language at a scale never before seen.

In this video, you can see our advanced AI automatically translating emotion, intonation, and utterances from the source speech into another language without human intervention.

With our XLPT system, we are not just translating words; we are translating emotions, making every piece of content as engaging and impactful as the original. This is the future of AI dubbing, and it’s here now.

If you’d like to see this in action, simply book a demo with one of our consultants.