
Meta AI Bug Exposed Private User Chats: Now Fixed

Abdullah Mustapha

July 16, 2025

Image credit: Shutterstock

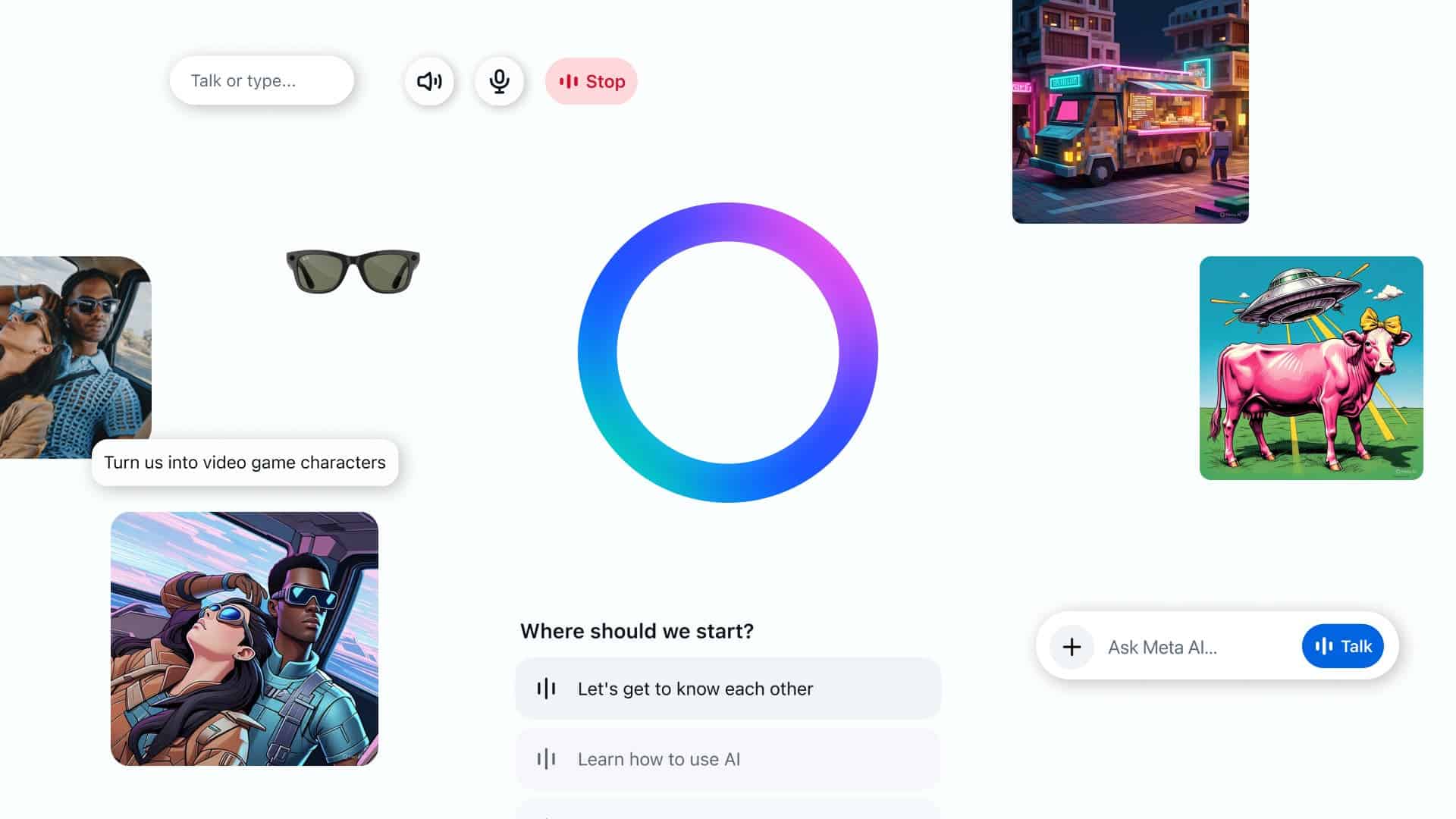

Meta has patched a major security flaw in its Meta AI chatbot. The bug allowed users to see private prompts and AI-generated responses from other users.

Meta Fixes Security Bug That Exposed Private Meta AI Conversations

Sandeep Hodkasia, founder of the cybersecurity firm AppSecure, discovered the flaw. He reported it to Meta on December 26, 2024, and received a $10,000 bug bounty for the private disclosure. Meta fixed the issue nearly a month later, on January 24, 2025.

According to Hodkasia, the bug was in how Meta AI handled prompt editing. Logged-in users can edit a previous prompt to regenerate text or images. When a prompt is edited, Meta's servers assign it a unique ID number. While analyzing his browser's network traffic, Hodkasia found that he could change this ID. Doing so made the servers return another user's prompt and AI-generated response, even though he was not authorized to see them.

Privacy Breach in Meta AI Revealed by Researcher

The root cause was a missing access control check: Meta's servers did not verify whether the requesting user was allowed to view a specific prompt. Hodkasia said the ID numbers were simple and predictable, so someone could guess them or use automated tools to cycle through them and collect other users' data.
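Meta has not published its server code, so the following is only a hypothetical Python sketch of this general class of bug, commonly called an insecure direct object reference (IDOR). The `PromptStore` class, its methods, and the owner-based check are illustrative assumptions, not Meta's implementation. It contrasts a lookup that trusts whatever ID the client sends with one that checks ownership on every request, and it uses random IDs to show why predictable numbers made guessing easier.

```python
from __future__ import annotations

import secrets
from dataclasses import dataclass


@dataclass
class PromptRecord:
    owner_id: str
    prompt: str
    response: str


class PromptStore:
    """Hypothetical in-memory store for edited prompts and their AI responses."""

    def __init__(self) -> None:
        self._records: dict[str, PromptRecord] = {}

    def save(self, owner_id: str, prompt: str, response: str) -> str:
        # Random URL-safe IDs are hard to guess, unlike sequential integers.
        # This only slows down enumeration; it does not replace the
        # ownership check in get().
        prompt_id = secrets.token_urlsafe(16)
        self._records[prompt_id] = PromptRecord(owner_id, prompt, response)
        return prompt_id

    def get_unsafe(self, prompt_id: str) -> PromptRecord | None:
        # Vulnerable pattern: trusts the client-supplied ID and never asks
        # who is making the request, so any user can read any record.
        return self._records.get(prompt_id)

    def get(self, requester_id: str, prompt_id: str) -> PromptRecord:
        # Safer pattern: confirm the requester owns the record on every access.
        record = self._records.get(prompt_id)
        if record is None or record.owner_id != requester_id:
            # Same error for "missing" and "not yours", so callers cannot
            # probe which IDs exist.
            raise PermissionError("prompt not found")
        return record
```

With this sketch, `store.get_unsafe(pid)` hands one user's record to anyone who supplies its ID, while `store.get("mallory", pid)` raises `PermissionError` unless Mallory owns it. Unguessable IDs make automated scraping harder, but the per-request ownership check is what actually closes the hole.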

Meta confirmed the bug was fixed in January. A company spokesperson, Ryan Daniels, told TechCrunch, “We found no evidence of abuse and rewarded the researcher.”

Although Meta found no evidence that the flaw was exploited, it highlights the risks of fast-moving AI development. Tech companies are racing to launch AI tools, and privacy and security safeguards do not always keep pace.

The Meta AI app, launched earlier this year, was meant to compete with popular tools like ChatGPT. However, its rollout faced problems from the start: some users accidentally made their private chats public. This bug adds to concerns about the platform's ability to protect user data.

Security experts say incidents like this underscore the need for rigorous testing before releasing AI tools. Hodkasia's discovery shows how a single missing authorization check can expose sensitive data.

While Meta fixed this issue within about a month of the report, ongoing vigilance is still necessary. As AI apps become more common, the cost of security lapses could become much higher.

Disclaimer: We may be compensated by some of the companies whose products we talk about, but our articles and reviews are always our honest opinions. For more details, you can check out our editorial guidelines and learn about how we use affiliate links.

Follow Gizchina.com on Google News for news and updates in the technology sector.

Source/VIA: TechCrunch