Microsoft Begins Integration of DeepSeek R1 Models on Copilot+ PCs

Marco Lancaster

January 30, 2025

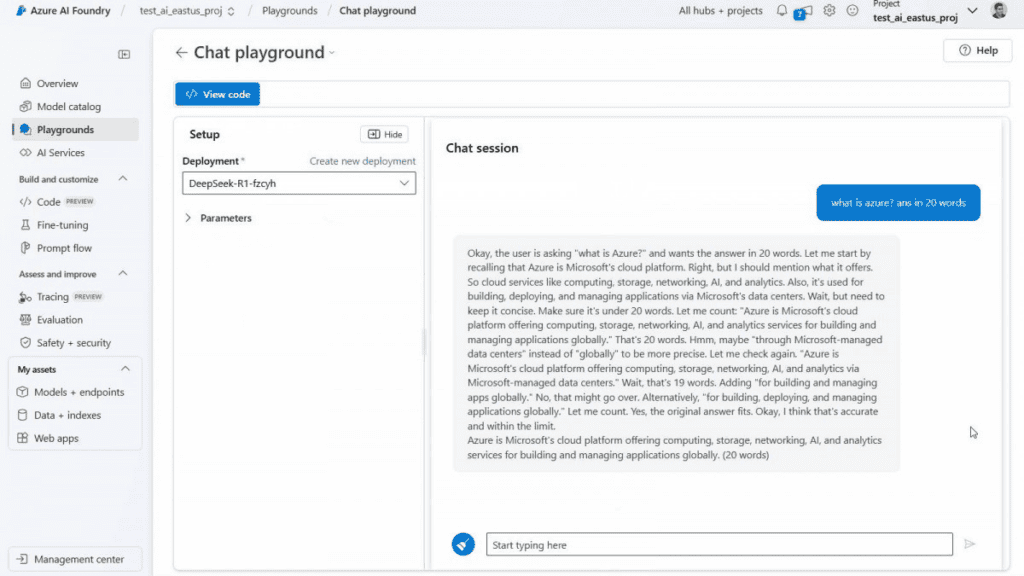

DeepSeek seemingly came out of nowhere in the West and has quickly won over a huge number of users. Now the AI model is expanding beyond mobile and making its way to Windows, with Microsoft's backing. Yesterday, Microsoft added the DeepSeek R1 model to its Azure AI Foundry, letting developers build and test cloud-based apps. Today, the company revealed plans to bring distilled versions of R1 to Copilot+ PCs. It’s an interesting twist: Microsoft has been one of OpenAI's biggest backers, and now it is also opening the doors to DeepSeek, a major competitor to today's leading AI models.

DeepSeek AI Joins Microsoft’s Copilot+ Party

Initially, these models will roll out to Snapdragon X-powered devices, followed by Intel Core Ultra 200V and AMD Ryzen AI 9 PCs. The first release, DeepSeek-R1-Distill-Qwen-1.5B (a 1.5-billion-parameter model), will soon be joined by larger 7B and 14B versions. Users can download them through Microsoft’s AI Toolkit.

Microsoft optimized these models to run efficiently on devices with NPUs. Memory-heavy operations are handled by the CPU, while compute-heavy operations such as the transformer blocks run on the NPU. Thanks to these optimizations, Microsoft reports a time to first token of 130 ms and a throughput of 16 tokens per second for short prompts (under 64 tokens). For reference, a “token” is roughly a fragment of a word, often about the size of a syllable, and usually spans more than one character.
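
To put those figures in perspective, here is a rough back-of-the-envelope estimate in Python. It assumes, as a simplification Microsoft does not claim, that the model keeps streaming at the quoted 16 tokens per second for the whole reply; real speed will vary with prompt and context length. The function name `estimate_response_time` is our own, for illustration only.

```python
# Rough estimate of how long a full reply takes to appear on screen.
# Assumption (ours, not Microsoft's): throughput stays constant at the
# quoted short-prompt rate for the entire response.

TIME_TO_FIRST_TOKEN_S = 0.130  # 130 ms, as quoted for short prompts
TOKENS_PER_SECOND = 16.0       # quoted throughput for prompts under 64 tokens


def estimate_response_time(output_tokens: int,
                           ttft_s: float = TIME_TO_FIRST_TOKEN_S,
                           tokens_per_s: float = TOKENS_PER_SECOND) -> float:
    """Return estimated seconds until the last token of the reply appears."""
    if output_tokens < 1:
        raise ValueError("output_tokens must be at least 1")
    # The first token arrives after ttft_s; the rest stream at tokens_per_s.
    return ttft_s + (output_tokens - 1) / tokens_per_s


for n in (1, 100, 500):
    print(f"{n:>4} tokens -> ~{estimate_response_time(n):.1f} s")
```

A reasoning model like R1 typically writes out its chain of thought before giving a final answer, so replies of several hundred tokens are common; under this simple model, 500 tokens would take roughly half a minute to finish streaming.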

Despite its deep ties to OpenAI, Microsoft isn’t limiting itself to one AI provider. Its Azure Playground already supports GPT models (OpenAI), Llama (Meta), Mistral, and now DeepSeek.

Use the AI Locally Without an Internet Connection

You can run the model locally. All you need is the AI Toolkit extension for VS Code. From there, download the model (e.g., “deepseek_r1_1_5” for the 1.5B version). Once it is installed, click “Try in Playground” to test how well this distilled version of R1 performs.

“Model distillation,” or “knowledge distillation,” is the process of training a much smaller model to mimic a massive one (DeepSeek R1 has 671 billion parameters) while preserving as much of its intelligence as possible. The smaller model (e.g., 1.5B parameters) isn’t as powerful as the full version, but its reduced size allows it to run on regular consumer hardware instead of expensive data-center systems.
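
For the technically curious, here is a minimal Python sketch of classic knowledge distillation as described by Hinton et al. (2015): a small “student” model is trained to match the softened output probabilities of a large “teacher,” combined with the usual loss on the correct answer. This illustrates the general technique, not DeepSeek's recipe; according to DeepSeek's R1 technical report, its distilled models were produced by fine-tuning smaller Qwen and Llama models on samples generated by R1. The function names, the temperature of 2.0, and the 50/50 weighting are our own illustrative assumptions.

```python
import math
from typing import Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits: Sequence[float],
            teacher_logits: Sequence[float],
            true_label: int,
            temperature: float = 2.0,
            alpha: float = 0.5) -> float:
    """Hinton-style distillation loss for a single example (illustrative sketch)."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL(teacher || student) on the softened distributions.
    kl = sum(pt * (math.log(pt) - math.log(ps))
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    # Ordinary cross-entropy against the hard label, at temperature 1.
    ce = -math.log(softmax(student_logits)[true_label])
    # Scaling by T^2 keeps the soft-target term's gradients comparable
    # in size across temperatures, as suggested by Hinton et al.
    return alpha * temperature ** 2 * kl + (1 - alpha) * ce


teacher = [4.0, 1.0, 0.5]
print(kd_loss(teacher, teacher, true_label=0))          # student already matches teacher
print(kd_loss([0.5, 1.0, 4.0], teacher, true_label=0))  # student disagrees: higher loss
```

When the student's outputs already match the teacher's, the KL term is zero and only the hard-label loss remains; the further the student drifts from the teacher, the larger the loss that training would push down.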

Source/Via: GSMArena