
HONOR to Launch Global AI-Powered Deepfake Detection in April 2025

Nick Papanikolopoulos

February 21, 2025

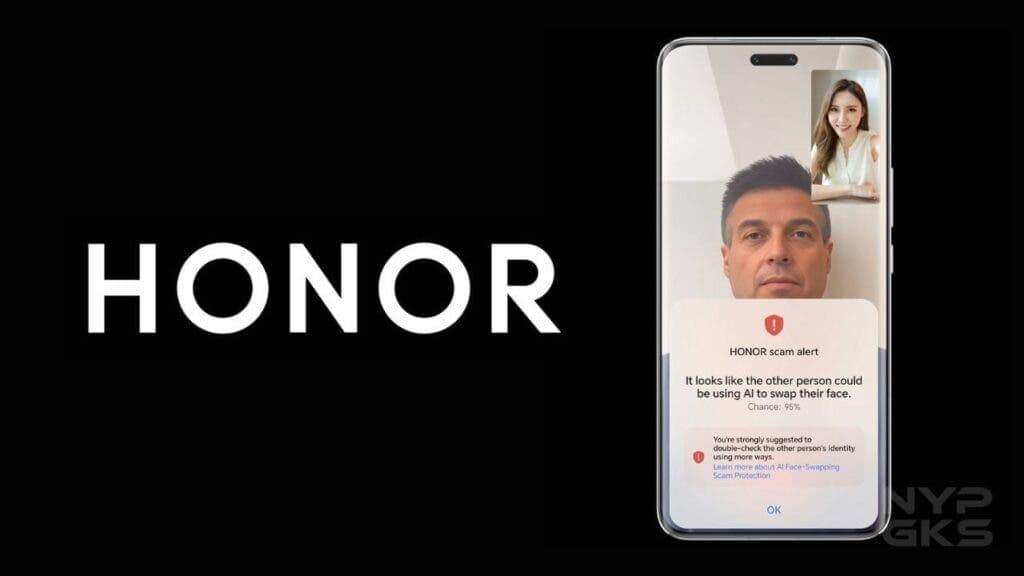

HONOR will launch its AI Deepfake Detection tool globally in April 2025, and more details are expected at this year's MWC 2025. The system helps people spot manipulated images and videos by alerting them when content has been altered. The goal is to improve online safety.

How HONOR AI Deepfake Detection Works

First shown at IFA 2024, the system uses AI to scan media for signs of tampering. It looks for:

- Blurry Pixels – Fake images often have strange textures and pixelation.

- Odd Borders – Edges of faces or objects may look unnatural.

- Glitches in Videos – Consecutive frames might not match up correctly.

- Unnatural Faces – The AI checks for misplaced features and odd proportions.

If fake content is detected, the system warns users immediately.

Why Deepfakes Are a Problem

AI has transformed many industries, but it has also increased cyber threats. A 2024 Entrust Cybersecurity Institute report found that a deepfake scam attempt occurs every five minutes. A 2024 Deloitte study showed that 59% of users struggle to tell real media from fake, and 84% want clear labels on digital content. HONOR believes that better tools and cross-industry collaboration are needed to counter these risks.

Groups like the Coalition for Content Provenance and Authenticity (C2PA) are developing standards to verify the origin and authenticity of digital media, including AI-generated content.

Risks for Businesses

Deepfake scams are rising fast. Between November 2023 and November 2024, 49% of companies reported deepfake-related fraud, an increase of 244%. Even so, 61% of business leaders have yet to take action.


The biggest risks include:

- Fraud & Identity Theft – Criminals use deepfakes to pretend to be real people.

- Corporate Espionage – Fake media can spread false information and harm businesses.

- Misinformation – Deepfakes can mislead the public and influence opinions.


How Companies Are Fighting Back

To stop deepfake fraud, businesses are using:

- AI Detection Tools – HONOR’s system spots unnatural eye movement, lighting issues, and playback errors.

- Industry Cooperation – Groups like C2PA, supported by Adobe, Microsoft, and Intel, work on verification standards.

- Built-in Protection – Qualcomm's Snapdragon X Elite uses on-device AI to detect deepfakes.

The Future of Deepfake Security

The deepfake detection market is projected to grow 42% each year, reaching $15.7 billion by 2026. As deepfakes become more convincing, people and businesses will need a combination of AI security tools, regulation, and awareness programs to reduce the risks.

UNIDO Warns About Deepfakes

Marco Kamiya of the United Nations Industrial Development Organization (UNIDO) said deepfakes put personal data at risk. Sensitive information, such as locations, payment details, and passwords, can be stolen.

Kamiya stressed that mobile devices, which store important data, are top targets for hackers. Protecting them is key to preventing scams and identity theft.

He explained:

“AI Deepfake Detection on mobile devices is crucial for online safety. It spots details people might miss, like unnatural eye movement, lighting errors, and video glitches. This technology helps individuals, businesses, and industries stay secure.”

HONOR emphasized the importance of deepfake detection. In a world where “seeing is no longer believing,” businesses must focus on security to protect their customers and reputation.
